Moore’s Law (transistors per chip) and Hendy’s Law (pixels per dollar) have been useful predictors of where processing power and digital photography were going. Something similar would be really useful for 3D printing. I tried to plot a law for print quality per dollar for 3D printers for an article I have been working on for the McKinsey Quarterly, but I don’t have the data. What I want to plot is something along these lines: quality (finer resolution in microns, the ability to print in multiple materials, etc.) improves at the same cost every X months or years. Plotting this would help people plan for, and benefit from, the disruption of 3D printing.

I need help to do this: I have set up a Google spreadsheet, to which anybody can contribute data points, at https://spreadsheets.google.com/spreadsheet/ccc?key=0AvV-pHeoX7ZYdG1OQkVNRVFnTEZLd3NoVUdHMTBIS2c&hl=en_US. The data I need are as follows:

At least one machine per year (where possible, at the low end of the market) showing:

- the resolution in microns (1/1000 of a millimetre, or 0.001mm) that it could achieve, and,

- where relevant, the materials that the build machine works with. In particular (i) how many materials of different properties (including colours) the machine can print in, and (ii) the degree to which they can be blended,

- Update 25/Aug/2011 – additional data requirement: speed (mm per second),

- Update 25/Aug/2011 following suggestion from Ulf Lindhe – cost of materials. I express this as material cost per gram of printed output.

It will also be important to have the price per unit (this may include supporting equipment necessary to operate the machine). In general, the machine should be at the lower end of the price range rather than a high-priced innovation.
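The data requested above could be captured as one row per machine. The following is a minimal Python sketch, assuming a CSV export of the sheet with hypothetical column names (`year`, `machine`, `resolution_um`, and so on; the real sheet’s headers may differ). It loads the rows and keeps only the cheapest machine for each year, in line with the low-end-of-market requirement.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class PrinterRecord:
    """One row of the data-collection sheet (column names are hypothetical)."""
    year: int
    machine: str
    resolution_um: float        # finest resolution, in microns (0.001 mm)
    material_count: int         # number of distinct materials/colours
    speed_mm_s: float           # manufacturer-quoted speed, mm per second
    material_cost_per_g: float  # material cost per gram of printed output
    price: float                # unit price, incl. required supporting equipment


def load_records(csv_text: str) -> list[PrinterRecord]:
    """Parse CSV text (e.g. a spreadsheet export) into PrinterRecord objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        PrinterRecord(
            year=int(row["year"]),
            machine=row["machine"],
            resolution_um=float(row["resolution_um"]),
            material_count=int(row["material_count"]),
            speed_mm_s=float(row["speed_mm_s"]),
            material_cost_per_g=float(row["material_cost_per_g"]),
            price=float(row["price"]),
        )
        for row in reader
    ]


def cheapest_per_year(records: list[PrinterRecord]) -> dict[int, PrinterRecord]:
    """Keep only the lowest-priced machine for each year (low end of the market)."""
    best: dict[int, PrinterRecord] = {}
    for rec in records:
        if rec.year not in best or rec.price < best[rec.year].price:
            best[rec.year] = rec
    return best
```

For example, `cheapest_per_year(load_records(text))` returns a dictionary keyed by year; the machine names and values used to try it out should be placeholders until real data points arrive in the sheet.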

This is intended to gather enough data to develop a rough starting point. In an area with such diverse technologies (in the first three years of the 1990s alone, Fused Deposition Modeling (FDM), Solid Ground Curing (SGC), Laminated Object Manufacturing (LOM), Selective Laser Sintering (SLS), and Direct Shell Production Casting (DSPC) were commercialized!), it will be difficult to come up with a sensible graph, as Joris Peels and Ed Grenda rightly warned me.

Update 25/Aug/2011: this is a useful reference: http://www.additive3d.com/3dpr_cht.htm

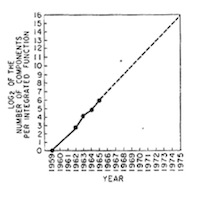

The best example of this kind of predictive guesswork is Gordon Moore’s 1965 article in Electronics, v. 38, No. 8. Moore, then at Fairchild Semiconductor, plotted the growth in chip complexity from 1959 to 1965 and projected that the number of transistors per integrated circuit would double every 12 months.

“If we look ahead five years, a plot of costs suggests that the minimum cost per component might be expected in circuits with about 1,000 components per circuit (providing such circuit functions can be produced in moderate quantities.) In 1970, the manufacturing cost per component can be expected to be only a tenth of the present cost.

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year (see graph on next page). Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000.”

Moore updated this in 1975, correctly predicting that from that year on the number of components per chip would double every two years.
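Moore’s figures are compound-doubling arithmetic, which a few lines of Python make explicit. Note that 65,000 is roughly 2^16, so ten annual doublings from 1965 imply a starting point of about 2^6 = 64 components; that starting value is an assumption inferred from the arithmetic, not a figure quoted above.

```python
def project(start_value: float, start_year: float, target_year: float,
            doubling_period_years: float) -> float:
    """Project a quantity that doubles every `doubling_period_years` years."""
    doublings = (target_year - start_year) / doubling_period_years
    return start_value * 2 ** doublings


# Moore's 1965 rate: a factor of two per year.
# Assumption: about 2**6 = 64 components in 1965 (inferred, not quoted).
print(project(64, 1965, 1975, 1.0))   # 65536.0, i.e. roughly "65,000"

# Moore's 1975 revision: doubling every two years gives 2**5 = 32x per decade.
print(project(1, 1975, 1985, 2.0))    # 32.0
```

The same function would express a 3D printing law once a doubling period for quality per dollar has been estimated from the data.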

Another useful example is Hendy’s Law, formulated in 1998, which identified a consistent trend in digital camera technology toward a higher pixel count per dollar. Barry Hendy, then Manager for Kodak Digital Imaging Systems for the Asia Pacific Region, plotted the pixel count per Australian dollar of Kodak’s most basic models. He used pricing data from 1994 onward and plotted the values on a logarithmic Y axis to show the linear rise per year.
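Plotting on a logarithmic Y axis turns steady exponential growth into a straight line, and the slope of that line gives the doubling time, which is the “every X months/years” figure wanted for 3D printing. Below is a minimal Python sketch of that fit using ordinary least squares on log2 of the metric. The data are synthetic, generated with a known 1.5-year doubling time; they are not real camera or printer measurements.

```python
import math


def doubling_time(years: list[float], values: list[float]) -> float:
    """Estimate the doubling time (in years) of an exponential trend.

    Fits a straight line to log2(value) versus year by ordinary least squares.
    On a log Y axis an exponential trend is this straight line, and its slope
    is the number of doublings per year.
    """
    n = len(years)
    logs = [math.log2(v) for v in values]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    var = sum((x - mean_x) ** 2 for x in years)
    slope = cov / var        # doublings per year
    return 1.0 / slope       # years per doubling


# Synthetic illustration only: a metric that doubles every 1.5 years.
years = [1994, 1995, 1996, 1997, 1998]
values = [1000 * 2 ** ((y - 1994) / 1.5) for y in years]
print(round(doubling_time(years, values), 3))   # 1.5
```

For a metric where smaller is better, such as resolution in microns, one would fit its reciprocal (for example `1000 / resolution_um`) so that improvement shows up as growth. With only four or five points, the estimate is sensitive to any single outlier.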

With only four years of data, he plotted the future. 3D printing will create massive opportunities. But it will also disrupt many businesses. To get the best out of this transition we need to be able to plan for it properly. So please contribute any data you can find to https://spreadsheets.google.com/spreadsheet/ccc?key=0AvV-pHeoX7ZYdG1OQkVNRVFnTEZLd3NoVUdHMTBIS2c&hl=en_US.

Johnny Ryan

(@johnnyryan / https://johnnyryan.wordpress.com)

Thanks to i.materialise, Fabaloo, and Ponoko for posting this on their blogs.

Excellent idea.

For home use, rather than business, how easy it is to recycle the materials would also be a deciding factor (not sure if any actually do this yet, but I know I’d sure jump at buying one where I could dump old or failed models back in for reuse).

I don’t think resolution is a very good metric for Additive Manufacturing (AM). It’s hard to define resolution quantifiably across all additive manufacturing processes. Sure, you could use pixel size, but this would only apply to pixel-based processes. You could use layer thickness, except this would not apply to layerless processes. Even if you just consider pixel-based processes, pixel size and layer thickness aren’t very good metrics: it’s possible to make features smaller than the pixel size and layer thickness by careful control of process parameters.

Your best bet at measuring the quality of AM is to have benchmarking parts made, 3D scan them, and measure quality by how much the scan differs from the CAD model. To get useful data you’d need to have many different parts made on different machines of the same make and model, as part quality varies with part location in the build chamber, from build to build, from machine to machine, and from operator to operator. Unfortunately, this would be a very time-consuming and expensive proposition… Even then, it would be hard to design a benchmarking part that would be useful across a wide set of machines, especially with build chambers that range from the size of a large apartment down to cubic millimeters. Benchmarking and developing standard metrics for AM happens to be an active area of research…
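Once a benchmark part has been scanned and the scan registered to the CAD model, the comparison reduces to summarizing deviations. A minimal Python sketch, assuming the measurements are already paired with their nominal values (in mm); registration and nearest-surface lookup are outside its scope. Because part quality varies across build locations, builds, machines, and operators, it also summarizes the RMS error over several builds.

```python
import math
import statistics


def rms_deviation(nominal_mm: list[float], measured_mm: list[float]) -> float:
    """Root-mean-square deviation between nominal (CAD) and measured values, in mm.

    Assumes each measured value is already paired with its nominal value, e.g.
    feature dimensions on a benchmark part, or per-point values taken after
    the scan has been registered to the CAD model.
    """
    if not nominal_mm or len(nominal_mm) != len(measured_mm):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(m - n) ** 2 for n, m in zip(nominal_mm, measured_mm)]
    return math.sqrt(sum(squared) / len(squared))


def summarize_builds(rms_per_build: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of RMS errors across builds (needs >= 2)."""
    return statistics.mean(rms_per_build), statistics.stdev(rms_per_build)


# Hypothetical benchmark: three features measured on one build.
print(rms_deviation([10.0, 20.0, 30.0], [10.1, 19.9, 30.0]))  # about 0.082 mm
```

Reporting the spread alongside the mean keeps one lucky build from making a machine look better than it is.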

Speed in mm/s isn’t a useful metric, as it only quantifies point-scanning processes (i.e., scanning a laser point across a surface). With line-based processes (scanning a line of inkjet nozzles across a surface) and area-based processes (using a DLP projector to cure photopolymer), which happen to be gaining popularity, it is completely useless. It might be more correct to speak in terms of deposition rate, or kilograms of material deposited per unit time. Of course, material deposition rate is variable on many machines, so one can build faster at the expense of resolution.
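One way to put point, line, and area processes on the same footing is to convert each to volume (or mass) deposited per hour. A rough Python sketch, assuming a rectangular track cross-section for point processes, a fully solid layer for area processes, and no time lost to travel moves or recoating; the process numbers in the example are placeholders, not specifications of any machine.

```python
def point_process_rate_cm3_h(speed_mm_s: float, track_width_mm: float,
                             layer_mm: float) -> float:
    """Volume per hour for a point process: speed x track width x layer height.

    Assumes a rectangular track cross-section and continuous deposition.
    """
    mm3_per_s = speed_mm_s * track_width_mm * layer_mm
    return mm3_per_s * 3600 / 1000          # mm^3/s -> cm^3/h


def area_process_rate_cm3_h(layer_area_mm2: float, layer_mm: float,
                            seconds_per_layer: float) -> float:
    """Volume per hour for an area process that exposes a whole layer at once.

    Assumes the entire layer area is solid, so this is an upper bound.
    """
    mm3_per_s = layer_area_mm2 * layer_mm / seconds_per_layer
    return mm3_per_s * 3600 / 1000


def mass_rate_g_h(volume_rate_cm3_h: float, density_g_cm3: float) -> float:
    """Convert a volume rate to a mass rate for a given material density."""
    return volume_rate_cm3_h * density_g_cm3


# Placeholder numbers only:
print(point_process_rate_cm3_h(50, 0.4, 0.2))        # ~14.4 cm^3/h
print(area_process_rate_cm3_h(100 * 100, 0.05, 10))  # ~180 cm^3/h
```

For an area process, time per layer barely depends on how much of the layer is filled, so its real rate depends heavily on part geometry; the upper bound above should be read with that in mind.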

On the cost of materials, do you mean the cost of virgin material or the cost of actually making something in a machine? For some processes, like laser sintering (LS), a percentage of material from a previous build (in LS the powder degrades each time it is recycled) is mixed with virgin material to decrease the cost of actually making things on the machine. Thus, the cost of making things on a machine can differ greatly from the cost of virgin material.
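The gap between virgin-material price and the cost of making parts can be sketched with a simple steady-state model for a powder process such as LS. This Python sketch is a hypothetical model, not an industry formula. It assumes that each build loads a fixed mass of powder, a fraction of which is virgin. The virgin top-up replaces powder that leaves the system (in parts or as discarded, degraded powder), and reused powder carries only a handling cost. All numbers in the example are hypothetical.

```python
def cost_per_gram_of_parts(powder_loaded_g: float, virgin_fraction: float,
                           virgin_price_per_g: float,
                           recycled_handling_per_g: float,
                           part_mass_g: float) -> float:
    """Material cost per gram of finished parts for one build (steady state).

    Hypothetical model: virgin powder is charged at its purchase price;
    reused powder is charged only a handling cost (sieving, storage).
    """
    if not 0.0 <= virgin_fraction <= 1.0:
        raise ValueError("virgin_fraction must be between 0 and 1")
    virgin_cost = powder_loaded_g * virgin_fraction * virgin_price_per_g
    reuse_cost = powder_loaded_g * (1.0 - virgin_fraction) * recycled_handling_per_g
    return (virgin_cost + reuse_cost) / part_mass_g


# Hypothetical build: 10 kg loaded, 30% virgin at 0.06 per gram, free reuse,
# 1 kg of parts. Result: about 0.18 per gram of parts, 3x the virgin price.
print(cost_per_gram_of_parts(10_000, 0.3, 0.06, 0.0, 1_000))
```

A lower virgin fraction or a fuller build chamber pushes the cost per gram of parts down toward, or below, the virgin price.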

One factor you should take into account is surface roughness before post-processing, as it is a good way to measure quality. Surface roughness of AM parts tends to be higher than that of traditionally manufactured parts due to pixellation and stairstepping (on curved or diagonal surfaces, layers tend to look like stair steps). However, surface finish is unlikely to keep improving exponentially once it reaches the level of traditional manufacturing. Indeed, some researchers are already producing stereolithography parts with surface finishes on par with injection molding…
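Surface roughness is commonly reported as Ra, the arithmetic mean of the absolute deviations of a surface profile from its mean line. A minimal Python sketch, assuming the profile heights have already been levelled (form and tilt removed), so that a simple average can stand in for the mean line; the sawtooth input is an illustrative stand-in for a levelled stair-stepped surface, not measured data.

```python
def roughness_ra(profile_um: list[float]) -> float:
    """Ra: mean absolute deviation of profile heights from their mean line.

    Assumes a levelled profile, so the mean line is just the average height.
    """
    if not profile_um:
        raise ValueError("profile must not be empty")
    mean_line = sum(profile_um) / len(profile_um)
    return sum(abs(z - mean_line) for z in profile_um) / len(profile_um)


# Illustrative sawtooth (two 75-micron steps), sampled four times per step.
print(roughness_ra([0, 25, 50, 75, 0, 25, 50, 75]))   # 25.0
```

Because Ra is a single number per surface, it would fit the “something really simple” requirement better than a full scan comparison, though it only captures finish, not dimensional accuracy.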

You might also want to consider build chamber size; overall, build chamber size seems to be increasing. You might get some funny data, though, as every once in a while some companies build monster-sized machines, like the apartment-sized one mentioned above. You might find some way of scaling build chamber size by resolution, as these monsters don’t tend to have very high resolution.

So, to get something like a Moore’s Law metric, you might want a dimensionless factor that takes all of these into account, such as the build chamber size/resolution factor mentioned above.
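One candidate for a dimensionless build chamber size/resolution factor is the number of resolution-sized cells that fit in the build chamber, optionally divided by price to get a “per dollar” figure in the spirit of Hendy’s Law. This is a hypothetical metric, not an established one. The Python sketch below uses separate XY and Z (layer) resolutions because they often differ, and the example numbers are placeholders.

```python
def resolvable_cells(build_x_mm: float, build_y_mm: float, build_z_mm: float,
                     xy_resolution_um: float, z_resolution_um: float) -> float:
    """Dimensionless count of resolution-sized cells in the build volume.

    Hypothetical metric: rewards both a larger chamber and finer resolution.
    """
    xy_mm = xy_resolution_um / 1000.0
    z_mm = z_resolution_um / 1000.0
    return (build_x_mm / xy_mm) * (build_y_mm / xy_mm) * (build_z_mm / z_mm)


def cells_per_dollar(cells: float, price: float) -> float:
    """Hypothetical 'quality per dollar' figure: resolvable cells per unit price."""
    return cells / price


# Placeholder machine: 200 x 200 x 200 mm chamber, 100 um XY, 100 um layers.
cells = resolvable_cells(200, 200, 200, 100, 100)
print(f"{cells:.3g}")   # 8e+09
```

A monster machine with coarse resolution and a small machine with fine resolution can score similarly here, which is exactly the trade-off the factor is meant to balance.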

Hi – thanks for this.

Great comments. I have been thinking that I might need to make charts for each technology, and see whether these conform to any Moore’s Law sort of plot. Point taken about layer thickness and build speed. The problem I’m finding, with build speed anyway, is that manufacturers tend to give these data not in grams deposited but in mm built (horizontally or vertically).

Really good point about virgin materials.

The problem is that a Moore’s Law needs something really simple, like transistors per chip or pixels per dollar. Pixels alone do not correspond to quality, nor do components. There are other elements of design that can improve performance but which these laws do not attempt to allow for. So, in your view, what is the simplest metric to use? I think there must be one, and it may be that only when we have the data will we see what it is.

Johnny

Hello. Why don’t you use the data already collected by Wohlers in the Wohlers Report? Please contact me by email. All the best, O.

Good idea – I have asked Terry for his input, but as the leading consultant in the industry he may not be able to put the data he has gathered out there in the open.

I work for a 3D printing toy company, and we are looking at the historical cost of 3D printing, for example how the cost of printing materials has been decreasing. We are not looking at the cost of 3D printers, but at the actual cost of printing. Do you by any chance have this data? Thanks!

I think Terry Wohlers might have those data http://www.wohlersassociates.com/technical-articles.html